Neural Network

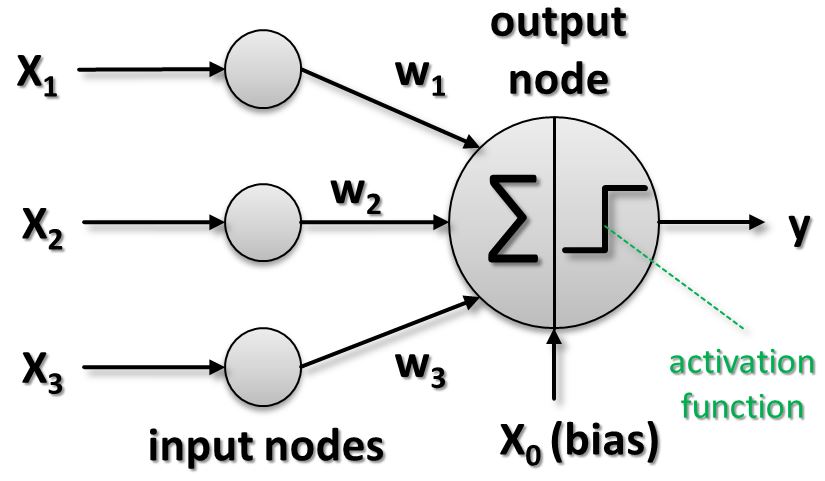

A neural network, more accurately called an artificial neural network (ANN), is a fairly complex data analysis technique. It is based on a well-defined architecture of many interconnected artificial neurons, combined with dedicated learning algorithms that learn efficiently from data using this brain-inspired architecture. The neuron is called ‘artificial’ because it is a simplified electronic version of the neuron, the biological nerve cell in the human brain. The human brain learns by changing the strength of the connections between neurons in response to repeated stimulation by the same impulses. We refer to this strength as the ‘weight’ of the connection between two neurons. The artificial neuron is modelled as the so-called perceptron model.

Each input node is associated with a weight that indicates the importance of that particular input. For a simple perceptron, the weights are learned by algorithms such as the ‘Perceptron Learning Algorithm’. Neural networks as shown below need more elaborate training algorithms, but the basic idea of the perceptron model remains. The inputs, each multiplied by its weight, are summed within the perceptron to give the activation value. This activation value is then passed to a dedicated activation function. Depending on the type of activation function, the perceptron ‘fires’ or ‘does not fire’, which in turn determines the output y. Learning in a perceptron, and likewise in the whole neural network, therefore amounts to adjusting these weights.
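The weighted sum, activation function, and weight learning described above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the function names, the step activation with a threshold at zero, the bias term, the learning rate, and the logical AND toy data are all assumptions chosen for the example.

```python
def step(activation):
    """Step activation: the perceptron 'fires' (1) when the activation value is >= 0."""
    return 1 if activation >= 0 else 0


def predict(weights, bias, inputs):
    """Sum the weighted inputs (plus an assumed bias term) and apply the activation function."""
    activation = sum(w * x for w, x in zip(weights, inputs)) + bias
    return step(activation)


def train_perceptron(samples, labels, learning_rate=1.0, epochs=20):
    """Perceptron learning rule: adjust the weights only when a sample is misclassified."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in zip(samples, labels):
            error = target - predict(weights, bias, inputs)  # -1, 0, or +1
            weights = [w + learning_rate * error * x for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias


# Toy, linearly separable data (an assumption for this sketch): logical AND.
samples = [(0, 0), (0, 1), (1, 0), (1, 1)]
labels = [0, 0, 0, 1]

weights, bias = train_perceptron(samples, labels)
predictions = [predict(weights, bias, x) for x in samples]
print(predictions)  # [0, 0, 0, 1]
```

Because the AND data is linearly separable, the learning rule settles on weights that classify all four samples correctly; for data that a single hyperplane cannot separate, a single perceptron of this kind would not converge.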

Neural Network Architecture

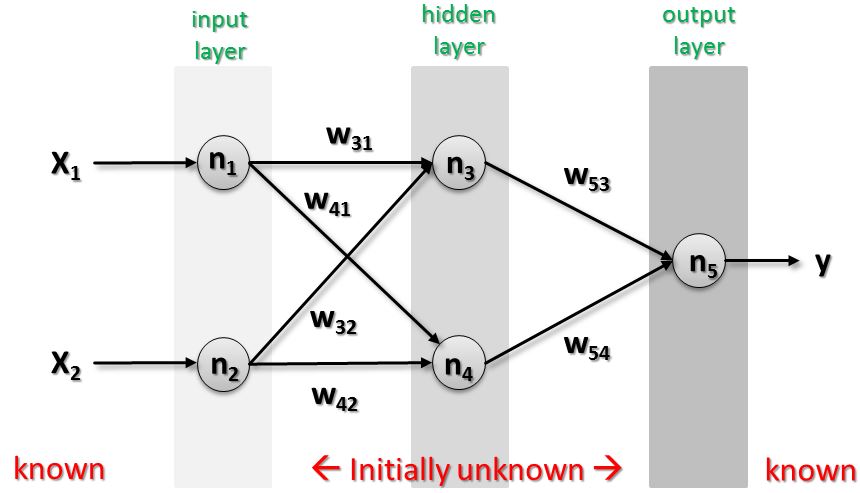

The number of artificial neurons used in a specific architecture depends on the given data analysis task. The architecture of an ANN is created by interconnecting artificial neurons into a network, and there are many different ways of doing so. In other words, there is no single architecture for each learning task, and the detailed architecture is a choice of the user. The example in the figure shows an ANN architecture with one input layer, one hidden layer, and one output layer.

While many neural network architectures are possible, the figure above shows a simple yet powerful two-layer feed-forward neural network topology. Feed-forward means that the nodes in one layer are connected only to the nodes in the next layer (a constraint on the network construction or architecture). In a supervised classification task, the input vector X (here with x1 and x2) is known, as are the corresponding labels y. Each hidden node in the figure can be viewed as a ‘simple perceptron’ that creates one hyperplane (also known as a decision boundary). The output node simply combines the results of all the perceptrons to yield the overall ‘decision boundary’ for the classification problem.
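To illustrate how hidden nodes each contribute one hyperplane and the output node combines them, here is a small sketch of a forward pass through a feed-forward network with two inputs, two hidden nodes, and one output node. The weights are hand-picked rather than learned, and the XOR problem is our own choice of example (it is not taken from the figure): no single hyperplane separates XOR, but two combined hyperplanes do.

```python
def step(activation):
    """Step activation: the node 'fires' (1) when the activation value is >= 0."""
    return 1 if activation >= 0 else 0


def perceptron(weights, bias, inputs):
    """One node: weighted sum of its inputs plus bias, passed through the step function."""
    return step(sum(w * x for w, x in zip(weights, inputs)) + bias)


# Hand-picked (not learned) parameters, assumed purely for illustration.
HIDDEN_LAYER = [
    ([1.0, 1.0], -0.5),    # hyperplane 1: fires when x1 OR x2 is set
    ([-1.0, -1.0], 1.5),   # hyperplane 2: fires unless both x1 AND x2 are set
]
OUTPUT_NODE = ([1.0, 1.0], -1.5)  # fires only when both hidden nodes fire


def forward(x):
    """Feed-forward pass: inputs -> hidden layer -> output node, no backward links."""
    hidden = [perceptron(w, b, x) for w, b in HIDDEN_LAYER]
    return perceptron(OUTPUT_NODE[0], OUTPUT_NODE[1], hidden)


inputs = [(0, 0), (0, 1), (1, 0), (1, 1)]
outputs = [forward(x) for x in inputs]
print(outputs)  # [0, 1, 1, 0]
```

In practice the weights would be found by a training algorithm rather than set by hand; the sketch only shows how the layers are wired and evaluated.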

Neural Network Applications

A wide variety of applications take advantage of neural networks. The most common application area is ‘pattern recognition’: ANNs are very good at recognizing patterns in many different kinds of problems. Concrete examples include facial recognition and character recognition.

Closely related to recognizing patterns is the field of applications known as ‘anomaly detection’. In this context, ANNs are used to detect deviations from the pattern learned from the training dataset. In other words, the ANN produces an output when something in the data does not fit the previously trained pattern. For example, when a ‘daily routine’ in some dataset is learned and then monitored, the ANN can alert the user that something is deviating from that routine.

In ‘signal processing’ applications, ANNs are used as filters when processing audio signals. In hearing aids, for example, they filter out noise and amplify important sounds.
