Text Classification

Text classification is a machine learning technique that categorizes any form of text data. It is used to organize text data better by automating the process of analyzing line by line while categorizing the text data into different topics. These topics are often a predefined number of categories and text data in documents are assigned to none, one, or even multiple categories.

More recently challenges occur with the rapid growth of big data that leads to more online text information. Also large quantities of structured and unstructured text data becoming available in business and scientific organizations. But manually creating text classifiers based on the occurance of certain keywords in sourcecode can be very time-consuming. Instead the idea of text classification is to automatically learn from given examples. This in turn means it is a supervised learning problem.

Need for Feature Engineering

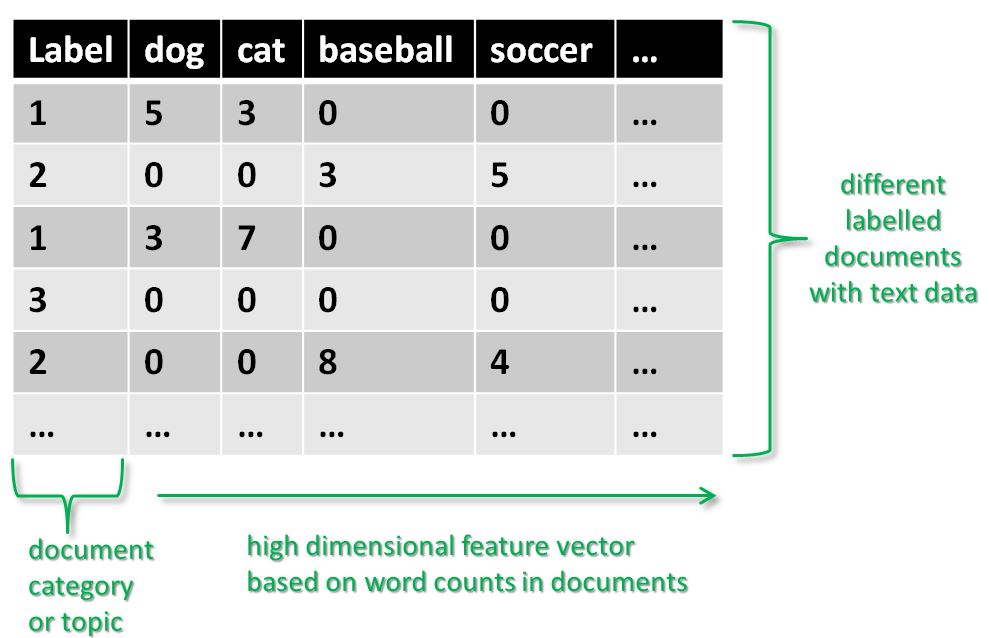

Text data in general and text documents in particular are basically many strings of characters. This pure text data needs to be transformed into features that can be used by a machine learning algorithm. In other words the text data representation needs to be more suitable for our given classification task at hand. In order to create the features we use two facts about text data. First word stems are considered to be interesting representation units of the text data. Second the concrete ordering of of text data within the document is not very important for a classification task. One example of a possible feature engineering for text data is shown below.

The illustration above shows the transformation of the pure text data into an attribute value representation. Each distinct word corresponds to a feature and the number of times this particular word occurs in the text document is the value of this feature. This typically leads to a high number of dimensions and is not useful given the large feature vector with ten of thousands of features. Therefore other feature engineering methods are often recommended to be used.

First a given threshold for the word count in documents is introduced. This means only words above a certain threshold (e.g. appearing more than three times) are considered as features. Text data also consists often of a wide variety of so-called stop-words (e.g. and, or, etc.) with little meaning and thus words are not considered to be features as well. Further methods often used in text classification include the use of an ‘information gain’ criteria and well known is also the use of the ‘inverse document frequency’.

Learning Models and Algorithms

One efficient learning model for text classification are Support Vector Machines (SVM) using kernel methods. Given labelled datasets for text documents a Radial Basis Function (RBF) kernel or simple polynomial kernel can be used. Another article about ‘Kernels’ in the Big Data Analysis section provides more pieces of information about such powerful kernel methods. For text classification is also a so-called ‘String kernel’ useful.

Other algorithms that can be also used for text classification while we have focussed here on SVMs. Examples are k-Nearest Neighbour, C4.5 decision tree algorithm, the Rocchio algorithm, or a Naive Bayes classifier.

Toolset

The standard tool to work with SVMs is libSVM that automatically includes RBF and polynomial kernels. The libSVM tool page offers an optional string kernel implementation as extra package.

More information about Text Classification

The following video provides an interesting introduction to text classification:

Follow us on Facebook: