Activation Function

An activation function is the specific function used in a neural network node to compute the node's output from a given node input. Activation functions have become increasingly important with the rise of big data and deep learning neural networks such as Convolutional Neural Networks.
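
The article does not say how a node's input is formed. A common convention, assumed here, is that the node first computes a weighted sum of its inputs plus a bias and then applies the activation function to that sum. The following minimal Python sketch illustrates this under that assumption; `node_output` and its parameters are hypothetical names chosen for illustration.

```python
import math
from typing import Callable, Sequence


def node_output(inputs: Sequence[float], weights: Sequence[float], bias: float,
                activation: Callable[[float], float]) -> float:
    """Compute one node's output as activation(w . x + b) (assumed convention)."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    # Weighted sum of the node's inputs plus a bias term (the pre-activation value).
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    # The activation function maps the pre-activation value to the node's output.
    return activation(z)


# Example: a node with two inputs that uses tanh as its activation function.
out = node_output([0.5, -1.0], [0.8, 0.2], bias=0.1, activation=math.tanh)
print(out)
```

Passing the activation as a parameter reflects the point of this article: the same node structure can use Sigmoid, tanh, ReLU, or another function.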

Concrete examples of activation functions are Sigmoid, tanh, and ReLU, and this article provides a short overview of them, including their specific properties. The Sigmoid activation function is one of the most widely used activation functions. It is a nonlinear activation function that enables neural networks to learn complex problems with a relatively small number of nodes.
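
The article does not give the Sigmoid formula. The standard logistic sigmoid is σ(x) = 1 / (1 + e^(-x)), whose outputs lie between 0 and 1. Below is a minimal Python sketch of it (not the article's own R example); the branch on the sign of x is one common way to avoid overflow in `math.exp` for large negative inputs.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic sigmoid 1 / (1 + exp(-x)), written to avoid overflow for large |x|."""
    if x >= 0:
        # exp(-x) <= 1 here, so it cannot overflow.
        return 1.0 / (1.0 + math.exp(-x))
    # For negative x, exp(-x) could overflow; use the equivalent form exp(x) / (1 + exp(x)).
    e = math.exp(x)
    return e / (1.0 + e)


for x in (-5.0, 0.0, 5.0):
    print(x, sigmoid(x))
```

Inputs far below 0 give outputs close to 0, inputs far above 0 give outputs close to 1, and sigmoid(0) is exactly 0.5.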

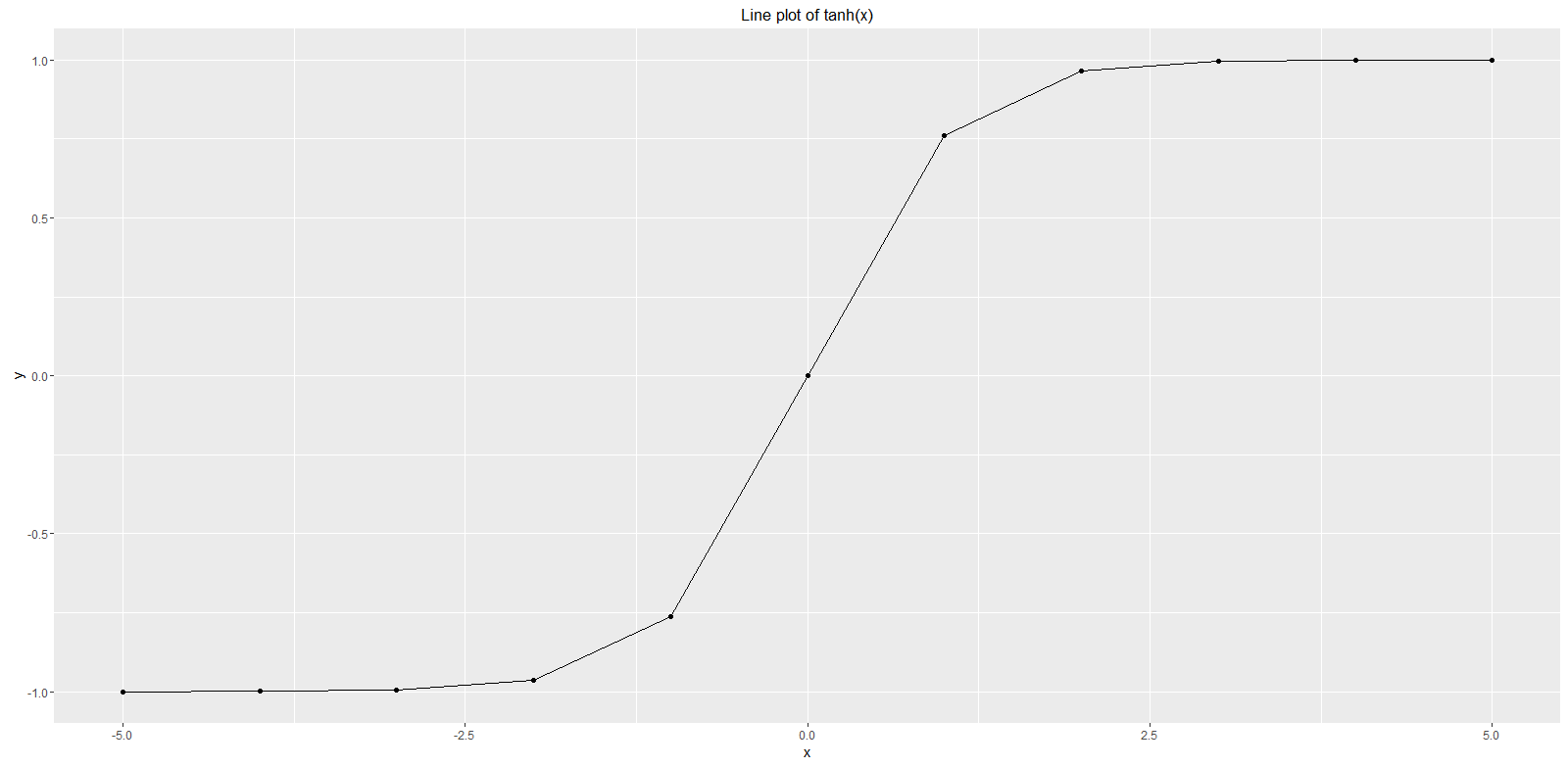

A further example of an activation function is tanh, the hyperbolic tangent function, shown above. It is often used as a nonlinear activation function in machine learning algorithms. An interesting property of this function is that its outputs lie only between -1 and 1. Please refer to our article on tanh for more details and a short R tool example of this function.
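
As a small illustration in Python (not the article's R example), tanh(x) = (e^x - e^(-x)) / (e^x + e^(-x)) can be written directly. This sketch assumes an overflow-safe rewrite in terms of e^(-2|x|), computed with `math.expm1` to stay accurate for tiny inputs, and checks the result against Python's built-in `math.tanh`.

```python
import math


def tanh(x: float) -> float:
    """Hyperbolic tangent (e^x - e^-x) / (e^x + e^-x), in an overflow-safe form."""
    # tanh(|x|) = (1 - e^(-2|x|)) / (1 + e^(-2|x|)); expm1 gives e^(-2|x|) - 1 accurately.
    em1 = math.expm1(-2.0 * abs(x))
    # tanh is an odd function, so restore the sign of x at the end.
    return math.copysign(-em1 / (2.0 + em1), x)


for x in (-3.0, 0.0, 3.0):
    print(x, tanh(x), math.tanh(x))
```

Mathematically the outputs never reach -1 or 1. In floating point, however, very large inputs round to exactly ±1.0.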

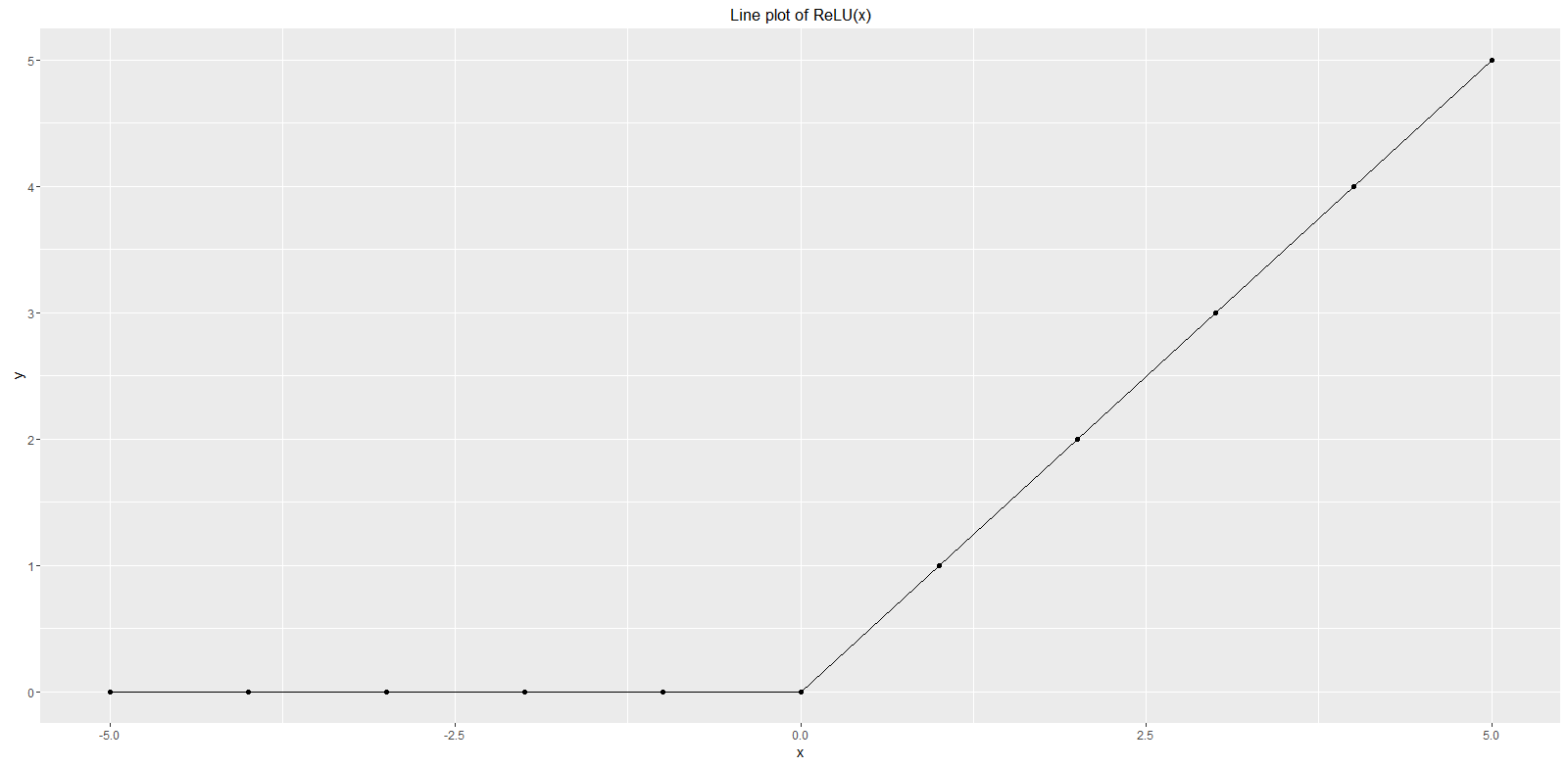

Another example of an activation function is the Rectified Linear Unit (ReLU), shown above. The ReLU is defined as f(x) = max(0, x). An interesting property of this function is that its output is 0 for negative values of x and x itself for positive values of x. Please refer to our article on ReLU Neural Network for more information and a short R tool example of this function.
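
The definition f(x) = max(0, x) translates almost word for word into code. Here is a minimal Python sketch of it (not the article's R example); the function name `relu` is chosen for illustration.

```python
def relu(x: float) -> float:
    """Rectified Linear Unit: f(x) = max(0, x)."""
    # Negative inputs are clipped to 0; non-negative inputs pass through unchanged.
    return max(0.0, x)


values = [-2.0, -0.5, 0.0, 0.5, 2.0]
print([relu(v) for v in values])  # prints [0.0, 0.0, 0.0, 0.5, 2.0]
```

Unlike Sigmoid and tanh, ReLU needs no exponential, which makes it cheap to evaluate.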

Activation Function Details

Please refer to the following video for more details on this topic: