Recurrent Neural Network

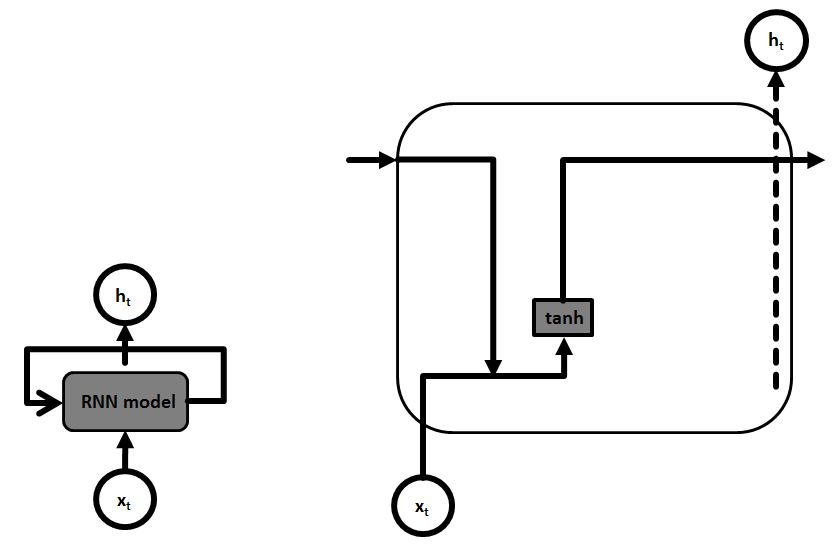

A Recurrent Neural Network (RNN) is a specific artificial neural network architecture that works well for sequence data of arbitrary length, including large datasets. An RNN contains cyclic connections that enable it to model sequence data better than a traditional feed-forward artificial neural network. The key idea of these cyclic connections, or loops, is that they allow information to persist: the network passes its internal state from one step of the sequence to the next, so earlier inputs can influence how later inputs are processed. The figure below shows the simple recurrent structure (left) and its single tanh neural network layer (right), which together form the basic RNN architecture.
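The single tanh layer described above can be sketched in a few lines of plain Python. This is a minimal illustration, not a reference implementation: the function name `rnn_step`, the weight names `W_x`, `W_h`, `b`, and the toy numbers are all assumptions chosen for this example. It assumes the commonly used update h_t = tanh(W_x x_t + W_h h_(t-1) + b).

```python
import math

def rnn_step(x_t, h_prev, W_x, W_h, b):
    """One recurrent step: h_t = tanh(W_x * x_t + W_h * h_prev + b)."""
    h_t = []
    for i in range(len(b)):
        total = b[i]
        # Contribution of the current input x_t.
        total += sum(W_x[i][j] * x_t[j] for j in range(len(x_t)))
        # Contribution of the previous hidden state (the cyclic connection).
        total += sum(W_h[i][k] * h_prev[k] for k in range(len(h_prev)))
        h_t.append(math.tanh(total))
    return h_t

# Hypothetical toy weights: 2 input features, 2 hidden units.
W_x = [[0.5, -0.3], [0.1, 0.8]]
W_h = [[0.2, 0.0], [-0.4, 0.6]]
b = [0.0, 0.1]

h0 = [0.0, 0.0]                       # initial hidden state
h1 = rnn_step([1.0, 2.0], h0, W_x, W_h, b)
```

Because tanh squashes its argument, every entry of the new hidden state stays strictly between -1 and 1, whatever the input.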

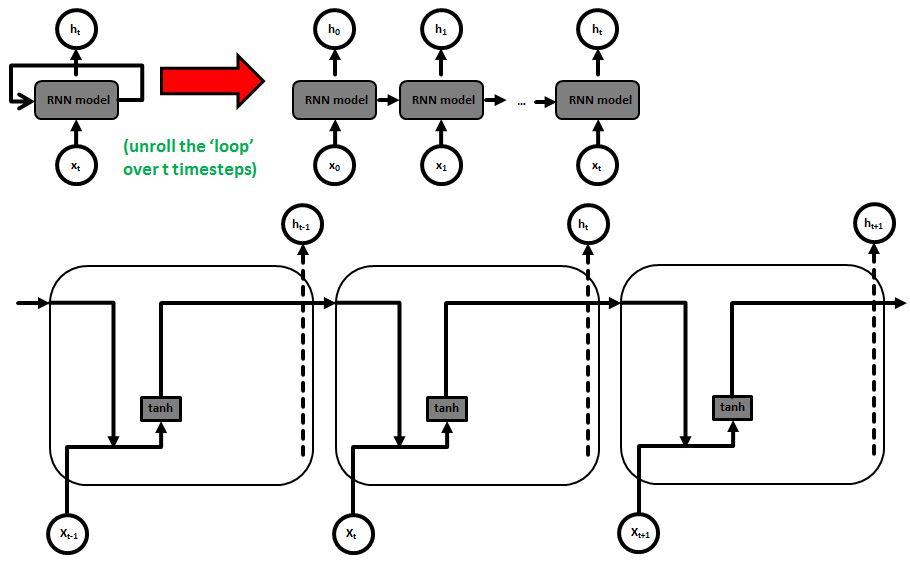

The connections within an RNN form a directed cycle instead of running strictly forward as in a feed-forward neural network. An RNN can also be viewed as multiple copies of the same network, each passing a message to its successor. This becomes clear when ‘unrolling the RNN loop’ as shown in the figure below. Note that each segment of the RNN works on a new input x_t from the data sequence together with the data passed on by the predecessor segment. Overall, an RNN and its segments form a chain structure that is well suited to working with sequences and lists.
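The equivalence between the loop and the unrolled chain can be checked with a small sketch. The example below assumes a scalar hidden state and hypothetical parameter values (`w_x`, `w_h`, `b`) purely for illustration; the names `rnn_step` and `run_rnn` are likewise made up for this example.

```python
import math

def rnn_step(x_t, h_prev, w_x, w_h, b):
    """One segment: combine the new input with the predecessor's state."""
    return math.tanh(w_x * x_t + w_h * h_prev + b)

def run_rnn(xs, h0, w_x, w_h, b):
    """Loop form: the same step, with the same weights, for every input."""
    h = h0
    states = []
    for x_t in xs:
        h = rnn_step(x_t, h, w_x, w_h, b)   # state is passed to the next step
        states.append(h)
    return states

# Hypothetical toy sequence and parameters.
xs = [1.0, 0.5, -1.0]
w_x, w_h, b = 0.7, 0.5, 0.0

states = run_rnn(xs, 0.0, w_x, w_h, b)

# Unrolled form: three copies of the same segment chained together.
h1 = rnn_step(xs[0], 0.0, w_x, w_h, b)
h2 = rnn_step(xs[1], h1, w_x, w_h, b)
h3 = rnn_step(xs[2], h2, w_x, w_h, b)
```

Both forms compute the same hidden states, because unrolling only writes out the iterations of the loop explicitly; the weights are shared across all segments.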

The dynamic temporal capability of the RNN technique makes it a key approach in many applications. Typical uses of RNNs include sequence labeling and sequence prediction tasks. Successful RNN applications include handwriting recognition, language modeling, language translation, and image captioning. There are different types of RNN architectures, and one very frequently used type is described in our article on long short-term memory.

Recurrent Neural Network Details

We refer to the following video about this subject: