LSTM Neural Network

An LSTM neural network is a deep learning technique that learns long-term dependencies in data by retaining information over long periods of time, including in big data settings. Long Short-Term Memory (LSTM) networks are a special kind of Recurrent Neural Network (RNN). Traditional feed-forward artificial neural networks (ANNs) show limits when a certain ‘history’ is required, because each forward/backward pass of backpropagation training starts independently of the pass before. As a consequence, data in which this ‘history’ takes the form of a ‘sequence’ requires another approach.

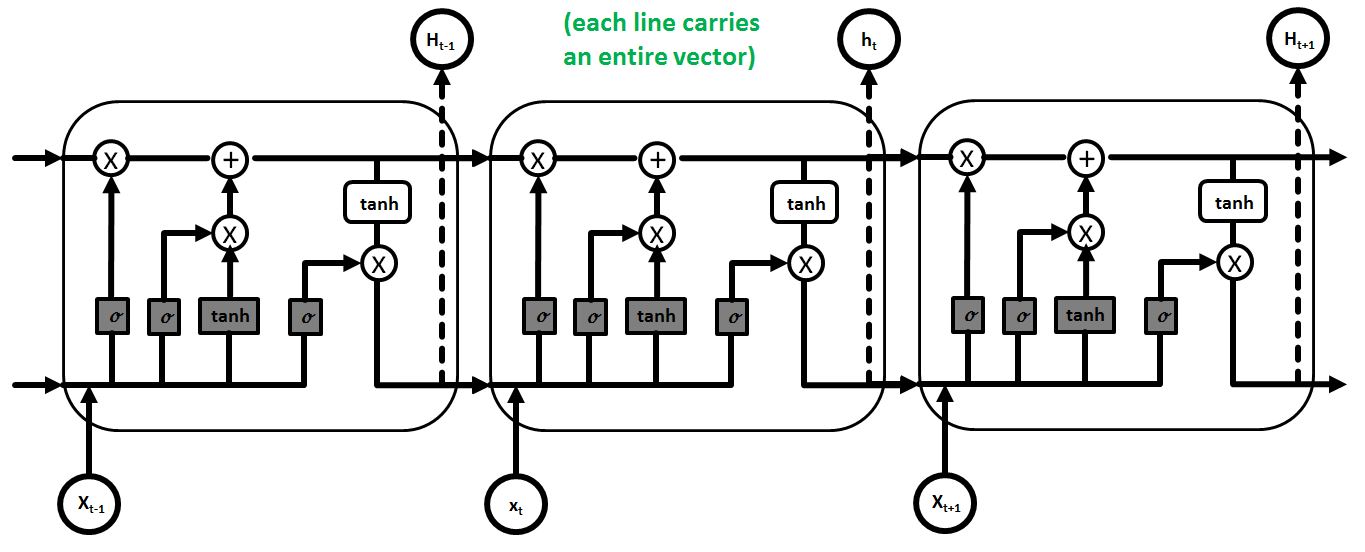

An RNN contains cyclic connections that enable the network to model sequence data better than a traditional feed-forward artificial neural network (ANN). These ‘loops’ (i.e. cyclic connections) allow information to persist from one step of a sequence to the next. The repeating module of a basic RNN is very simple: it has only a single layer (e.g. tanh). As a special kind of RNN, the LSTM has the same chain structure, but its repeating module consists of four neural network layers interacting in a specific way. As shown in the figure below, the LSTM model introduces a ‘memory cell’ structure into the underlying basic RNN architecture using four key elements: an input gate, a neuron with a self-recurrent connection, a forget gate, and an output gate.
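To make the single-layer repeating structure of a basic RNN concrete, here is a minimal sketch in plain Python. The function names (`rnn_step`, `run_rnn`), the weight names (`W_x`, `W_h`, `b`), and all numeric values are assumptions chosen for illustration; they do not come from any particular library.

```python
import math

def rnn_step(x, h_prev, W_x, W_h, b):
    """One step of a basic RNN: a single tanh layer over the input and the previous state."""
    h = []
    for i in range(len(b)):
        total = b[i]
        total += sum(W_x[i][j] * x[j] for j in range(len(x)))
        total += sum(W_h[i][j] * h_prev[j] for j in range(len(h_prev)))
        h.append(math.tanh(total))
    return h

def run_rnn(sequence, W_x, W_h, b):
    """Feed a sequence through the loop; the hidden state h carries the 'history'."""
    h = [0.0] * len(b)  # start with an empty history
    for x in sequence:
        h = rnn_step(x, h, W_x, W_h, b)
    return h

# Hypothetical weights: 1 input feature, 2 hidden units
W_x = [[0.5], [-0.3]]
W_h = [[0.1, 0.2], [0.0, 0.4]]
b = [0.0, 0.1]

# The same two inputs in a different order give different final states,
# because each step depends on the state left behind by the previous one.
h_a = run_rnn([[1.0], [0.0]], W_x, W_h, b)
h_b = run_rnn([[0.0], [1.0]], W_x, W_h, b)
print(h_a, h_b)
```

A feed-forward network applied to each input separately would have no way to tell the two orderings apart; the recurrent term `W_h` is what makes the order matter.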

As shown above, the data in the LSTM memory cell flows straight down the chain, with only some linear interactions, across the elements of the time series. The cell state Ct can differ at each step of the LSTM model and is modified through gate structures. The linear interactions on the cell state are point-wise multiplication (×) and point-wise addition (+). To protect and control the cell state Ct, three different types of gates exist in the structure.
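The gate mechanics can be sketched as a single LSTM step in plain Python. This follows the commonly used LSTM formulation (forget, input, and output gates plus a tanh candidate layer), which is assumed here to match the figure. The names `lstm_step`, `params`, `W_f`, `W_i`, `W_g`, `W_o` and all numeric values are hypothetical and chosen only for illustration.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def affine(W, v, b):
    """Return W @ v + b for a list-of-rows matrix W."""
    return [sum(w * a for w, a in zip(row, v)) + b_i for row, b_i in zip(W, b)]

def lstm_step(x, h_prev, c_prev, params):
    """One LSTM step: four layers (three sigmoid gates, one tanh candidate)."""
    v = h_prev + x  # concatenation of previous output and current input
    f = [sigmoid(z) for z in affine(params["W_f"], v, params["b_f"])]    # forget gate
    i = [sigmoid(z) for z in affine(params["W_i"], v, params["b_i"])]    # input gate
    g = [math.tanh(z) for z in affine(params["W_g"], v, params["b_g"])]  # candidate values
    o = [sigmoid(z) for z in affine(params["W_o"], v, params["b_o"])]    # output gate
    # Cell state update: only point-wise multiplication and point-wise addition
    c = [f_k * c_k + i_k * g_k for f_k, c_k, i_k, g_k in zip(f, c_prev, i, g)]
    h = [o_k * math.tanh(c_k) for o_k, c_k in zip(o, c)]
    return h, c

# Hypothetical parameters: 1 input feature, 1 hidden unit, all weights zero.
# The biases hold the forget gate open (about 1) and the input gate shut (about 0).
params = {
    "W_f": [[0.0, 0.0]], "b_f": [10.0],
    "W_i": [[0.0, 0.0]], "b_i": [-10.0],
    "W_g": [[0.0, 0.0]], "b_g": [0.0],
    "W_o": [[0.0, 0.0]], "b_o": [0.0],
}

h, c = lstm_step(x=[1.0], h_prev=[0.0], c_prev=[0.8], params=params)
print(c)  # stays very close to the previous cell state 0.8
```

With the forget gate near 1 and the input gate near 0, the cell state passes through the step almost unchanged, which is how the gates protect stored information over many steps.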

LSTM Neural Network Details

Please refer to the following video for more details: